Post-Quantum Cryptography: Physical Attacks and Countermeasures

In our previous blog, Prepare for the Quantum Breakthrough with Post-Quantum Cryptography, we explored the impact that emerging quantum computers will have on our cryptographic infrastructure. Here, we'll look back to past difficulties with securely deploying cryptographic implementations in practice, relate them to the upcoming transition to post-quantum cryptography and provide an outlook on some of the novel challenges that await our cryptographic infrastructure.

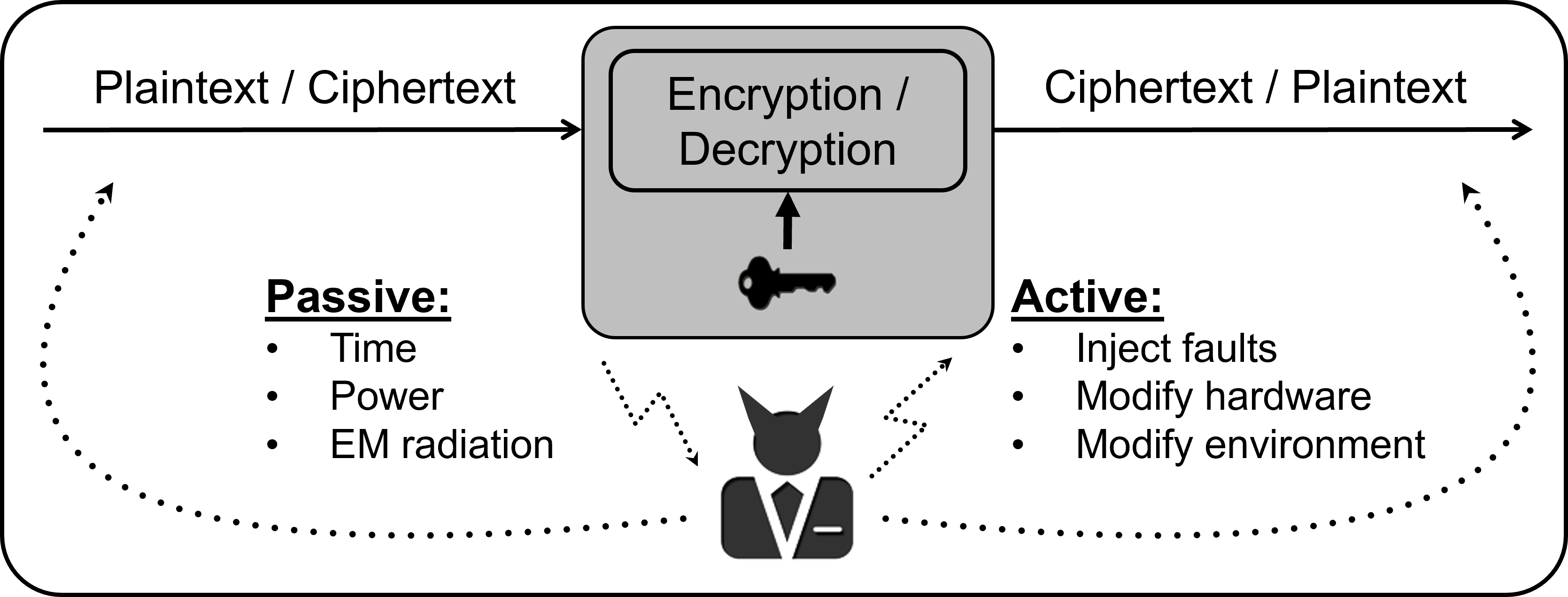

Cryptographic algorithms are typically implemented in a dedicated, highly secure segment of a system. Users of the system (including malicious ones) can submit inputs and observe the outputs, but cannot obtain information about any internal values, including the secret cryptographic data. In other words, the cryptographic engine is modeled as a “black box”, and all contemporary and relevant post-quantum cryptographic algorithms are believed to provide sufficient security guarantees against a black-box attacker.

Opening the Black Box

When such an algorithm is implemented and deployed in a physical system, however, the original black box assumption is no longer fulfilled. Paul Kocher demonstrated this in his seminal publication in 1996, in which he inferred secret information by simply measuring the execution time of the cryptographic implementation. Kocher observed that the execution time varies depending on the value of the secret key, and that this timing difference reveals information about the inside of the black box. This concept of measuring the physical characteristics of a device while it is processing secret information has been formalized under the term side-channel analysis, and the field has boomed since Kocher’s initial work. The approach has been extended to include other sources of information besides timing behavior, such as power consumption or electromagnetic emanations, and it increasingly relies on sophisticated statistical tools, such as machine learning, to process the measurements and extract even the slightest trace of secret data.

Besides passively measuring the computation, it has been shown that actively disturbing a cryptographic computation can also lead to successful recovery of sensitive information. These so-called fault injection attacks range from simple glitches in the power line of the device to sophisticated disturbances at the transistor level via a laser.
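The key-dependent running time that Kocher exploited can be illustrated with a small sketch. The function below is a textbook square-and-multiply exponentiation, not code from any particular product; its name, the toy modulus and the two example keys are assumptions made for illustration. Instead of measuring wall-clock time, it counts modular multiplications, which is the quantity that drives the timing difference.

```python
def square_and_multiply(base, exponent, modulus):
    """Left-to-right binary exponentiation.

    Returns (result, operations), where operations counts modular
    multiplications and squarings as a stand-in for execution time.
    """
    result = 1
    operations = 0
    for bit in bin(exponent)[2:]:
        # A squaring is performed for every bit of the exponent.
        result = (result * result) % modulus
        operations += 1
        if bit == "1":
            # The extra multiplication happens only for set bits,
            # so the total work depends on the secret exponent.
            result = (result * base) % modulus
            operations += 1
    return result, operations


MODULUS = 1009            # toy modulus, far too small for real use
LIGHT_KEY = 0b10000001    # hypothetical secret with two set bits
HEAVY_KEY = 0b11111111    # hypothetical secret with eight set bits

_, ops_light = square_and_multiply(5, LIGHT_KEY, MODULUS)
_, ops_heavy = square_and_multiply(5, HEAVY_KEY, MODULUS)
print(ops_light, ops_heavy)  # prints "10 16"
```

Both keys have the same bit length, yet the second one needs six more operations. An attacker who can time the computation therefore learns something about the number of set bits in the key without ever seeing an internal value.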

The potency of this physical threat has been highlighted by numerous breaks of commercial systems in which an attacker could cause significant damage by stealing cryptographic secrets. Consequently, any implementation of such schemes in a system where an attacker has physical access to the device, such as a hotel room card, requires dedicated countermeasures to thwart these physical attacks. While these countermeasures can come with a significant overhead in area and performance, that overhead is outweighed by the cost and impact of a successful attack. To give customers guarantees about the physical security of a device, certification bodies provide assurance that it meets the expected level of protection.
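As one illustration of what such a countermeasure can look like, the sketch below uses a Montgomery ladder, a well-known way to make an exponentiation perform the same number of operations for every key bit. It is a minimal sketch under stated assumptions, not a hardened implementation: the function name and toy parameters are hypothetical, Python's big integers are not constant-time, and a real design would replace the branch with a branch-free conditional swap and add protection against power analysis and fault injection.

```python
def montgomery_ladder(base, exponent, modulus):
    """Exponentiation with one multiplication and one squaring per bit.

    Returns (result, operations). The invariant r1 == r0 * base (mod modulus)
    holds after every iteration.
    """
    r0, r1 = 1, base % modulus
    operations = 0
    for bit in bin(exponent)[2:]:
        if bit == "1":
            r0, r1 = (r0 * r1) % modulus, (r1 * r1) % modulus
        else:
            r0, r1 = (r0 * r0) % modulus, (r0 * r1) % modulus
        # Both branches do exactly two modular operations.
        operations += 2
    return r0, operations


MODULUS = 1009  # toy modulus, far too small for real use
_, ops_a = montgomery_ladder(5, 0b10000001, MODULUS)
_, ops_b = montgomery_ladder(5, 0b11111111, MODULUS)
print(ops_a, ops_b)  # prints "16 16"
```

The operation count no longer depends on how many key bits are set, but every key now pays for the worst case. That is a small instance of the performance overhead that countermeasures bring with them.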

Surviving the FO-Calypse

For today's standardized public-key cryptography, it is known how to efficiently achieve protection against increasingly potent physical attacks. This results in secure products that are certified to provide a certain level of protection. In the future, you can expect NXP to bring the same focus on physical security to the upcoming post-quantum standards. We are already investigating the challenges that come with new families of algorithms. Besides the more traditional aspects, such as a significant increase in key sizes that runs up against memory constraints, NXP is exploring the standard candidates regarding their susceptibility to both side-channel and fault injection attacks. This has already led to multiple scientific publications, which we will detail in future blog posts.

One specific challenge that affects some of the post-quantum finalists is also the topic of our recent presentation at Real World Crypto 2022, titled “Surviving the FO-calypse: Securing PQC Implementations in Practice”.

These finalists all rely on the Fujisaki-Okamoto (FO) transform to achieve security in the black box model. At its core, the transformation adds a check to the algorithm that verifies whether the original input was valid: the decrypted message is re-encrypted and the result is compared with the received ciphertext. While this works fine in the black box setting, it has significant implications in the physical world. The check strongly increases the information that a physical attacker can extract from measurements and therefore complicates the integration of dedicated countermeasures for these schemes. In effect, achieving the level of protection we are used to in hardened RSA and ECC implementations is much more costly and involved for PQC algorithms that are based on the FO transform. Combining this increased overhead with the already larger and more expensive post-quantum algorithms poses a non-trivial challenge to the designer of such systems and could have a significant impact on the embedded industry.
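The structure of the FO check can be sketched in a few lines. The example below is a toy under loudly stated assumptions: it wraps a small, insecure ElGamal-style encryption rather than any of the post-quantum finalists, and every name in it (`keygen`, `encaps`, `decaps`, the hash labels, the parameters `P` and `G`) is hypothetical. It only aims to show where the re-encryption and comparison sit inside decapsulation.

```python
import hashlib
import secrets

P = 2**127 - 1  # toy prime modulus, NOT a secure parameter choice
G = 3           # toy base element


def _hash(label, *values):
    """Hash a label and some integers to an integer (domain-separated)."""
    h = hashlib.sha256(label)
    for v in values:
        h.update(v.to_bytes(16, "big"))
    return int.from_bytes(h.digest(), "big")


def keygen():
    x = secrets.randbelow(P - 2) + 1      # decryption exponent
    z = secrets.randbelow(P)              # secret used for rejection keys
    return pow(G, x, P), (x, z)


def pke_encrypt(pk, message, coins):
    """ElGamal-style encryption, deterministic once the coins are fixed."""
    return pow(G, coins, P), (message * pow(pk, coins, P)) % P


def pke_decrypt(x, ciphertext):
    c1, c2 = ciphertext
    # c1**(P-1-x) equals c1**(-x) mod P for nonzero c1 (Fermat).
    return (c2 * pow(c1, P - 1 - x, P)) % P


def encaps(pk):
    message = secrets.randbelow(P - 1) + 1
    coins = _hash(b"coins", message) % (P - 1)   # coins derived from message
    ciphertext = pke_encrypt(pk, message, coins)
    return ciphertext, _hash(b"key", message, *ciphertext)


def decaps(pk, sk, ciphertext):
    x, z = sk
    message = pke_decrypt(x, ciphertext)
    # FO check: re-encrypt the decrypted message with re-derived coins ...
    coins = _hash(b"coins", message) % (P - 1)
    recomputed = pke_encrypt(pk, message, coins)
    # ... and compare with what was received. This comparison, and the
    # re-encryption feeding it, is what a physical attacker can observe.
    if recomputed == ciphertext:
        return _hash(b"key", message, *ciphertext)
    return _hash(b"reject", z, *ciphertext)      # implicit rejection


pk, sk = keygen()
ciphertext, shared_key = encaps(pk)
assert decaps(pk, sk, ciphertext) == shared_key
```

Note that decapsulation now runs a full encryption on a value derived from the secret key, and then compares the result against attacker-chosen data. In a hardened implementation both the re-encryption and the comparison would need protection, which is one way to see why the overhead grows.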

In our presentation, we detail this challenge through a concrete case study and discuss potential ways to overcome it, demonstrating how we can help with the transition to a post-quantum secure era. More details can be found in the corresponding slide set and recording of the presentation.

Watch for additional articles in this ongoing series about post-quantum cryptography. You may also be interested in:

- Prepare for the Quantum Breakthrough with Post-Quantum Cryptography

- Post-Quantum Cryptography: What You Need to Know (webinar)

- The Emergence of Post-Quantum Cryptography (white paper)

- Post-Quantum Cryptography: Setting the Future Security Standards

Authors

Tobias Schneider

Tobias Schneider is a senior cryptographer at the NXP Competence Center for Cryptography and Security (CCC&S) in the CTO organization at NXP Semiconductors. He is also a member of the Post-Quantum Cryptography team. He received his PhD in cryptography from Ruhr-Universität Bochum in 2017 and has authored over 25 international publications. His research topics include the physical security of cryptographic implementations, in particular of post-quantum cryptography, and cyber resilience.

Joppe W. Bos

Joppe W. Bos is a Technical Director and cryptographer at the Competence Center Crypto & Security (CCC&S) in the CTO organization at NXP Semiconductors. Based in Belgium, he is the technical lead of the Post-Quantum Cryptography team, and has authored over 20 patents and 50 academic papers. He is the co-editor of the IACR Cryptology ePrint Archive.

Christine Cloostermans

Christine Cloostermans is a senior cryptographer at the Competence Center for Cryptography and Security (CCC&S) in the CTO organization at NXP Semiconductors. She acquired her doctorate from TU Eindhoven on topics related to lattice-based cryptography. Christine is a co-author on 10+ scientific publications, and has given many public presentations in the area of post-quantum cryptography. Beyond PQC, she is active in multiple standardization efforts, including IEC 62443 for the Industrial domain, ISO 18013 for the mobile driver’s license, and the Access Control Working Group of the Connectivity Standards Alliance.

Joost Renes

Joost Renes is a Senior Cryptographer at the Competence Center for Cryptography and Security (CCC&S) in the CTO organization at NXP Semiconductors. He holds a PhD in Cryptography from Radboud University in the Netherlands and is a developer of the NIST standardization proposal SIKE. He works towards solving the many challenges related to securely implementing post-quantum cryptography on resource-constrained systems and integrating them into security-critical protocols.