Application Notes (5)


i.MX 8M Plus NPU Warmup Time [AN12964]

Fact Sheets (1)


eIQ Machine Learning Software Development Environment [EIQ-FS]

Technical information and expertise to help you design faster and get to market sooner.

eIQ software is integrated into NXP's Yocto development environment and delivers TensorFlow Lite (TF Lite) for NXP's MPU platforms. Google developed TF Lite to provide reduced implementations of TensorFlow (TF) models. It uses several techniques to achieve low latency, such as pre-fused activations and quantized kernels, which allow smaller and (potentially) faster models. Like TensorFlow, TF Lite also uses the Eigen library to accelerate matrix and vector arithmetic.
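The quantized kernels mentioned above work on 8-bit integers instead of 32-bit floats. The following is a minimal sketch of the affine mapping behind that idea, `real = scale * (q - zero_point)`, written in plain Python. The helper names (`choose_qparams`, `quantize`, `dequantize`) are hypothetical and are not part of the TF Lite API, and the simple min/max parameter selection is an assumption made for illustration; real converters typically choose parameters per tensor or per channel, often using calibration data.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def choose_qparams(values: Sequence[float]) -> Tuple[float, int]:
    """Pick a scale and zero point from the min/max of `values` (illustrative only)."""
    lo = min(min(values), 0.0)  # the range must contain 0.0 so that zero is exact
    hi = max(max(values), 0.0)
    if hi == lo:
        return 1.0, 0
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = int(round(QMIN - lo / scale))
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to int8 codes, clamping to the representable range."""
    return [max(QMIN, min(QMAX, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(codes: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats: real = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in codes]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
    scale, zero_point = choose_qparams(weights)
    codes = quantize(weights, scale, zero_point)
    print(codes)
    print(dequantize(codes, scale, zero_point))
```

Each stored value takes one byte instead of four, which is where the smaller model size comes from. In this sketch, the values recovered by `dequantize` differ from the originals by at most one quantization step (`scale`).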

TF Lite defines a model file format based on FlatBuffers. Compared with TF's protocol buffers, FlatBuffers have a smaller memory footprint, which allows better use of cache lines and leads to faster execution on NXP devices. TF Lite supports a subset of TF neural network operations, as well as recurrent neural network (RNN) and long short-term memory (LSTM) architectures.
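A FlatBuffer is read in place, with no separate unpacking step, so the start of a model file can be inspected cheaply. As a small illustration, the sketch below checks whether a byte string looks like a TF Lite model: it reads the little-endian root offset in bytes 0-3 and compares bytes 4-7 against the `TFL3` file identifier declared in the TF Lite schema. The function name `looks_like_tflite` is hypothetical, and the check covers only the header. It does not validate the model contents.

```python
import struct

TFLITE_FILE_IDENTIFIER = b"TFL3"  # file identifier declared in the TF Lite schema


def looks_like_tflite(data: bytes) -> bool:
    """Return True if `data` starts like a TF Lite FlatBuffer (header check only)."""
    if len(data) < 8:
        return False
    # Bytes 0-3: little-endian offset to the root table.
    (root_offset,) = struct.unpack_from("<I", data, 0)
    # Bytes 4-7: the optional FlatBuffers file identifier.
    has_identifier = data[4:8] == TFLITE_FILE_IDENTIFIER
    # The root table cannot overlap the 8-byte header or lie outside the buffer.
    return has_identifier and 8 <= root_offset < len(data)


if __name__ == "__main__":
    import sys

    # Usage (hypothetical file name): python check_model.py model.tflite
    with open(sys.argv[1], "rb") as f:
        print(looks_like_tflite(f.read(16)))
```

A check like this can be handy in a deployment script for rejecting an obviously wrong file before it is handed to the interpreter on the target board.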


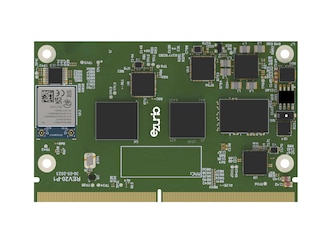

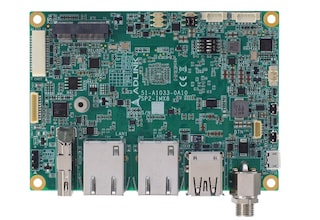


For a complete list of partners that support this software, see the Partner Marketplace.
